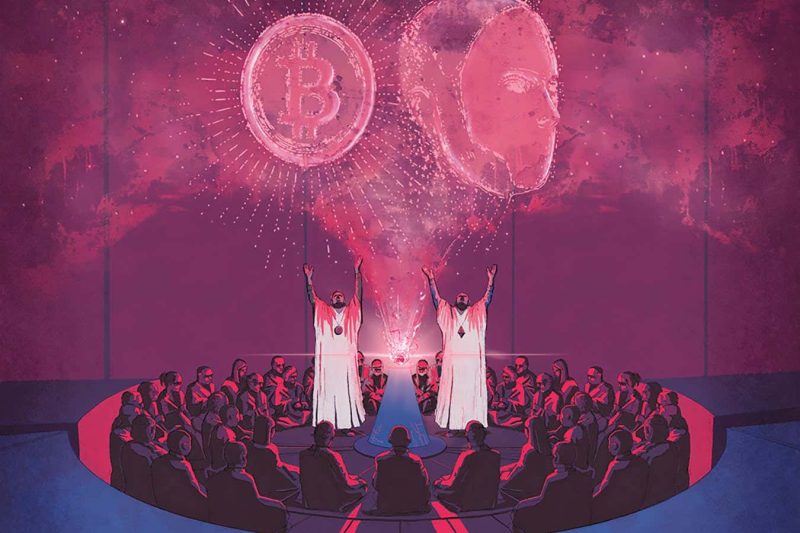

THIS was the year when two of Silicon Valley’s biggest hype blimps – cryptocurrency and artificial intelligence – were deflated by drama. First came the downfall of Sam Bankman-Fried, whose shady cryptocurrency empire landed him in court, where he was convicted of fraud and conspiracy. During his trial, witnesses and evidence revealed that Bankman-Fried’s cryptocurrency exchange FTX was siphoning billions of dollars from unwitting investors into one of his other assets, a cryptocurrency trading firm called Alameda Research.

A few weeks later, the other Silicon Valley Sam – Sam Altman – went through a corporate melodrama. Altman is CEO of OpenAI, maker of ChatGPT and one of the world’s most successful AI start-ups. In late November, the board of OpenAI claimed, rather mysteriously, that Altman wasn’t “consistently candid” with it and abruptly fired him. Stung, Altman hastily arranged a deal to set up his own research division at Microsoft.

When most of OpenAI’s 700+ employees threatened to defect with Altman to Microsoft, he was reinstated at OpenAI and the board was overhauled. There is still no official story about why it all happened, but let’s just say Altman had a really bad week where he almost lost his billion-dollar baby.

Aside from their first names and billionaire drama, the two Sams have nothing in common – except for their association with a trendy form of philanthropy called effective altruism (EA).

Popularised by the philosopher William MacAskill, EA has many adherents in Silicon Valley. They love its directive of “earn to give”, which suggests that people should rake in as much money as possible in order to donate a portion of it to “optimal” causes. Most of those causes are related to AI and high-tech doomsday prepping, and are intended to benefit humanity in the extremely long term, centuries from now – a philanthropic stance called longtermism.

Indeed, many EA adherents believe the best form of philanthropy shouldn’t focus on today’s victims of poverty, homelessness and war, but on entrepreneurs who promise to make AI friendly towards humans.

Bankman-Fried served on the board of MacAskill’s organisation, the Centre for Effective Altruism, and gave millions of dollars to EA causes. Altman appointed several EA sympathisers to his board, including computer scientist Ilya Sutskever, who has said in a number of places that he believes OpenAI is on the cusp of developing artificial general intelligence, or a human-equivalent mind so powerful that it might constitute an existential risk to humanity (see “The future of AI: The 5 possible scenarios, from utopia to extinction”).

Being affiliated with EA made it seem that the work the Sams were doing had a higher purpose. They were building a better future, where work and money would be completely transformed by technology. Plus, they were saving humanity!

But when push came to shove, it turns out some of those ideals took a bit of a back seat. Speaking to a journalist during his trial, Bankman-Fried said that his investment in EA was partly “dumb shit” he said to make himself seem ethical. For his part, Altman claimed to care about grave existential risks caused by OpenAI projects. But at the same time, he was deploying and selling an untested technology he himself had called potentially dangerous – a move many effective altruists view as irresponsible. Perhaps the commitment of both men to EA was more about words than deeds.

Silicon Valley truly is a capitalist Thunderdome, where two Sams enter and one Sam leaves. Unfortunately, the losers are all of us in the crowd, cheering them on.
